7 Advanced SQL Interview Questions to Master in 2025

Welcome to the ultimate guide for developers and data professionals aiming to stand out in a competitive job market. If you're preparing for a role that demands deep database expertise, you've come to the right place. We're moving beyond basic SELECT statements to tackle the complex challenges that truly test your SQL mastery. This article is your comprehensive resource for some of the most critical advanced SQL interview questions you are likely to encounter.

This guide provides more than just answers; it offers the strategic thinking behind them. We will dive deep into sophisticated concepts with practical, real-world examples and clear, line-by-line code explanations.

You will learn how to:

- Effectively use window functions like

RANK()andDENSE_RANK(). - Optimize queries by choosing between operators like

EXISTSandIN. - Manage complex data structures using recursive Common Table Expressions (CTEs).

- Demonstrate your understanding of foundational principles like ACID properties and normalization.

By mastering these specific topics, you'll be equipped to articulate your expertise, solve complex problems under pressure, and prove you have the skills needed for a top-tier data role. Let's get started.

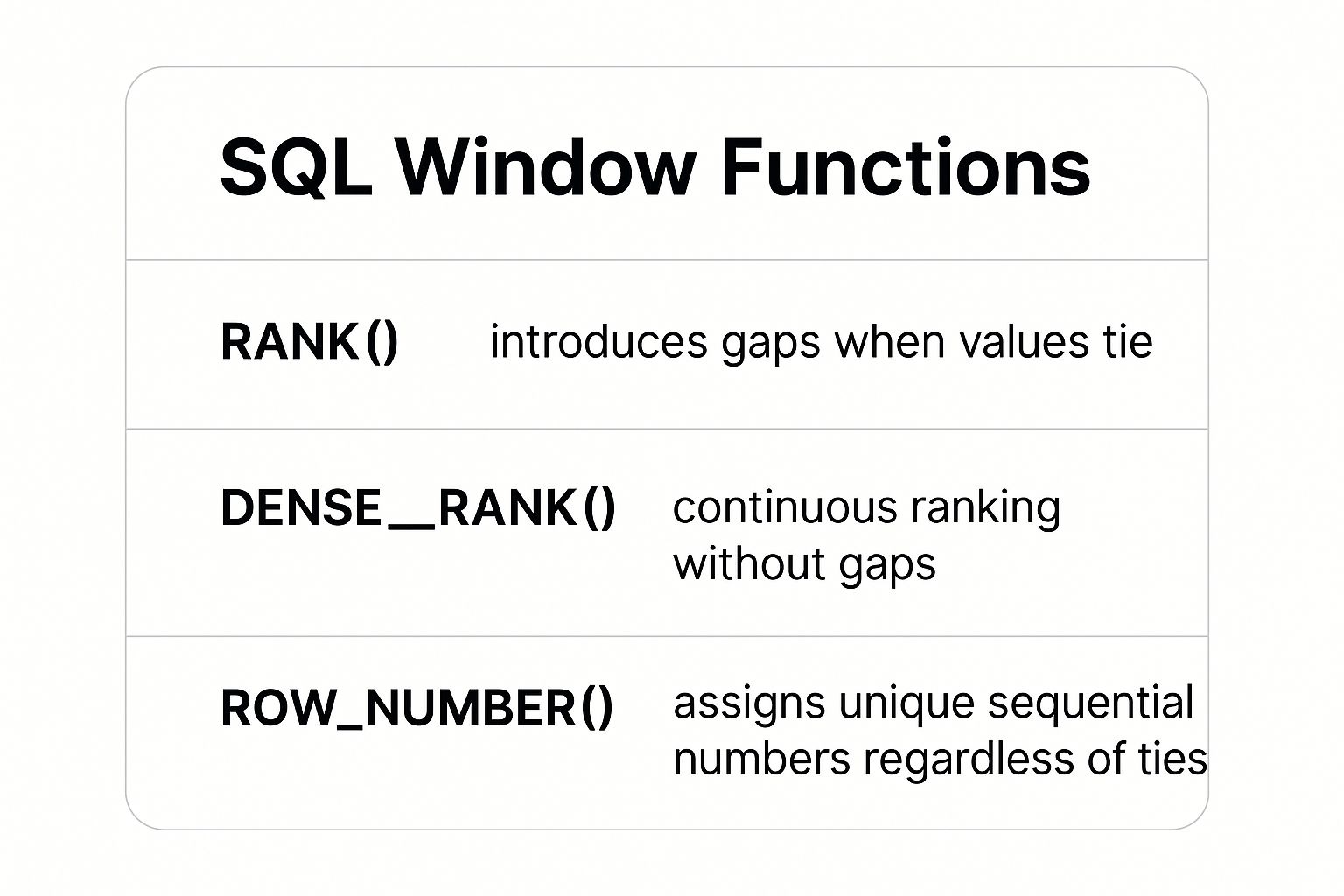

1. Explain the difference between RANK(), DENSE_RANK(), and ROW_NUMBER() window functions

This is one of the most common advanced SQL interview questions because it directly tests your understanding of window functions, which are crucial for complex analytical queries. The question assesses your ability to assign ranks or sequential numbers to rows within a partition of a result set, a frequent task in data analysis and reporting. The key is to articulate the subtle but critical differences in how RANK(), DENSE_RANK(), and ROW_NUMBER() handle tied values.

How They Work and When to Use Them

These functions all operate over a "window" of data defined by the OVER() clause, which must include an ORDER BY sub-clause. Their behavior diverges when they encounter duplicate values in the ordering column.

ROW_NUMBER(): Assigns a unique, sequential integer to each row. If two rows have the same value in theORDER BYcolumn, they will still receive different row numbers, determined arbitrarily but consistently by the database. Use this when you need a unique identifier for each row in the result set, such as for pagination or selecting the first N records.RANK(): Assigns the same rank to rows with tied values. However, it then skips the next rank(s) to account for the tie. For example, if two rows tie for 2nd place, they both get rank 2, and the next rank assigned is 4. This is the classic "Olympic-style" ranking.DENSE_RANK(): Also assigns the same rank to tied values but does not skip any ranks. If two rows tie for 2nd place, they both get rank 2, and the next rank assigned is 3. This is ideal for creating gapless leaderboards.

Example: Ranking Employee Salaries

Consider an employees table where we want to rank employees by salary within each department.

SELECT

employee_name,

department,

salary,

ROW_NUMBER() OVER(PARTITION BY department ORDER BY salary DESC) AS row_num,

RANK() OVER(PARTITION BY department ORDER BY salary DESC) AS rank_val,

DENSE_RANK() OVER(PARTITION BY department ORDER BY salary DESC) AS dense_rank_val

FROM

employees;

If the "Sales" department has two employees earning $80,000, ROW_NUMBER() would give them sequential numbers (e.g., 1 and 2), RANK() would assign them both rank 1 and skip to rank 3 for the next salary, while DENSE_RANK() would assign them both rank 1 and move to rank 2 for the next salary.

To help you visualize these differences, the following summary box breaks down the core behavior of each function.

As the reference shows, your choice depends entirely on whether you need unique numbering, traditional ranking with gaps, or a continuous ranking system. Understanding this distinction proves you can select the right tool for specific analytical tasks, a key skill for any data-focused role.

2. Write a query to find the second highest salary without using LIMIT or TOP

This is another classic in the lineup of advanced SQL interview questions. It’s designed to test your problem-solving abilities and your knowledge of core SQL concepts beyond simple filtering. By prohibiting the use of convenient but non-standard clauses like LIMIT or TOP, the interviewer forces you to demonstrate a deeper understanding of subqueries, aggregate functions, and potentially window functions. This question reveals if you can think algorithmically within the constraints of SQL.

How It Works and When to Use It

The core challenge is isolating the second-highest value from a set. This is a common requirement in tasks like identifying runner-up performers, finding second-tier customer segments, or any scenario where you need to look beyond the absolute maximum. There are several valid approaches, each with its own trade-offs. The most common methods involve using subqueries or Common Table Expressions (CTEs).

- Subquery with

MAX(): This method involves finding the overall maximum salary and then finding the maximum salary among all values that are less than the overall maximum. It’s a logical, step-by-step approach that works in almost any SQL database. - Correlated Subquery: An alternative is to use a correlated subquery to count how many distinct salaries are greater than the current salary. The row where this count is exactly one represents the second-highest salary.

- Window Functions: For modern SQL databases, using a window function like

DENSE_RANK()is often the most elegant and efficient solution. You can rank all salaries and simply select the one with a rank of 2.

Example: Finding the Second Highest Salary

Let's use the subquery approach with the MAX() function, as it's the most universally compatible solution. Consider an employees table with employee_name and salary columns.

SELECT MAX(salary) AS second_highest_salary

FROM employees

WHERE salary < (SELECT MAX(salary) FROM employees);

This query first runs the inner subquery (SELECT MAX(salary) FROM employees) to find the absolute highest salary. The outer query then finds the maximum salary from the set of all salaries that are strictly less than that absolute maximum, effectively giving you the second-highest value.

This question tests your ability to manipulate data sets without relying on database-specific shortcuts. Successfully answering it shows that you can solve complex analytical problems using fundamental SQL principles, a crucial skill for anyone in a data-centric role. Discussing the pros and cons of this method versus a DENSE_RANK() solution will further impress your interviewer.

3. Explain the difference between EXISTS and IN operators, and when to use each

This is a classic question in advanced SQL interviews because it probes your understanding of subquery logic, performance optimization, and how SQL handles NULL values. The choice between EXISTS and IN can significantly impact query performance and correctness. Answering this well shows that you think beyond just getting the right result and consider efficiency and edge cases. The key is to explain their fundamental operational differences.

How They Work and When to Use Them

Both operators are used with subqueries to filter data, but they evaluate conditions differently. IN compares a value from the outer query to a list of values from the subquery, while EXISTS simply checks if the subquery returns any rows at all.

IN: This operator is best understood as a shorthand for multipleORconditions. The database engine executes the subquery first, collects all the results into a temporary set, and then compares each row from the outer query against this set. UseINwhen you are comparing against a small, static list of values.EXISTS: This operator checks for existence. For each row in the outer query, it runs the subquery to see if it returns any rows. As soon as it finds one matching row, it stops and returnsTRUE, making it highly efficient for large subqueries. UseEXISTSwhen you only need to confirm that a related record exists, especially if the subquery returns a large volume of data.

Example: Finding Customers with Orders

Imagine you have Customers and Orders tables. You want to find all customers who have placed at least one order.

Using IN would look like this:

SELECT

customer_name

FROM

Customers

WHERE

customer_id IN (SELECT customer_id FROM Orders);

Using EXISTS is often more performant for this task:

SELECT

c.customer_name

FROM

Customers c

WHERE

EXISTS (SELECT 1 FROM Orders o WHERE o.customer_id = c.customer_id);

The EXISTS version is typically faster because the database can stop searching the Orders table for a given customer as soon as it finds the first order. In contrast, the IN subquery would have to collect all customer_ids from the entire Orders table first. A critical distinction also arises with NULLs: NOT IN will not match any rows if the subquery result set contains a NULL, whereas NOT EXISTS handles this scenario predictably.

4. Write a query to identify and remove duplicate records from a table

This is a classic and highly practical advanced SQL interview question because it simulates a common data cleansing task. Interviewers use it to evaluate your ability to combine window functions like ROW_NUMBER(), Common Table Expressions (CTEs), and Data Manipulation Language (DML) commands like DELETE. Success requires a logical, step-by-step approach to first identify duplicates based on specific criteria and then safely remove them without losing unique records.

How They Work and When to Use Them

The most robust method involves using a CTE with the ROW_NUMBER() window function. This approach allows you to assign a unique, sequential number to rows that are identical based on a set of columns you define as constituting a "duplicate." By partitioning the data on these columns and ordering by a primary key or timestamp, you can isolate the first occurrence of a record from its subsequent duplicates.

- Identification: The

ROW_NUMBER()function is used within a CTE to assign a rank to each row within a partition of duplicate records. ThePARTITION BYclause specifies the columns that define a duplicate (e.g.,email,product_id). - Isolation: The first instance of a record in each partition will get a row number of 1, while all subsequent duplicates will get numbers greater than 1 (2, 3, etc.).

- Deletion: A

DELETEstatement can then be executed on the CTE, targeting all rows where the assigned row number is greater than 1. This ensures that only one copy of each record is preserved.

Example: Deduplicating Customer Records

Imagine a customers table where duplicate entries exist for the same email address. The goal is to keep only the most recently created record for each email.

-- Step 1: Use a CTE to identify duplicates

WITH NumberedCustomers AS (

SELECT

customer_id,

email,

created_at,

ROW_NUMBER() OVER(

PARTITION BY email

ORDER BY created_at DESC

) AS rn

FROM

customers

)

-- Step 2: Delete rows where the row number is greater than 1

DELETE FROM NumberedCustomers

WHERE rn > 1;

In this query, the PARTITION BY email groups all records with the same email address. The ORDER BY created_at DESC ensures the newest record gets rn = 1. The DELETE statement then removes all other older, duplicate records. This multi-step, CTE-based approach is considered best practice because it is clear, efficient, and less error-prone than other methods. It’s a core technique for maintaining data integrity in any database.

5. Explain database normalization and write queries demonstrating 1NF, 2NF, and 3NF

This question probes your foundational knowledge of relational database design, a critical skill that impacts data integrity, efficiency, and scalability. Interviewers ask this to gauge whether you can structure data logically to eliminate redundancy and inconsistent dependencies, a core tenet of robust database architecture. Your answer reveals your understanding of the "why" behind database structure, not just the "how" of querying it.

How They Work and When to Use Them

Database normalization is the process of organizing columns and tables in a relational database to minimize data redundancy. Each "normal form" (NF) represents a progressively stricter set of rules.

- First Normal Form (1NF): Ensures that a table's columns hold atomic values (no repeating groups or multi-valued fields) and each row is unique, identified by a primary key. This is the most basic level of organization.

- Second Normal Form (2NF): Requires the table to be in 1NF and all non-key attributes to be fully functionally dependent on the entire primary key. This rule applies to tables with composite primary keys and prevents partial dependencies.

- Third Normal Form (3NF): Builds on 2NF by requiring that all attributes are dependent only on the primary key, not on other non-key attributes. This step eliminates transitive dependencies, where a non-key attribute determines another non-key attribute.

Example: Normalizing a Customer Order System

Imagine a single, unnormalized table tracking customer orders. A common interview approach is to present this and ask you to normalize it step-by-step.

Unnormalized Table: orders

(OrderID, ProductID, CustomerID, CustomerName, Product, Quantity, Price, CustomerCity)

- To achieve 1NF, we ensure all columns are atomic. The initial structure already meets this.

- To achieve 2NF, we address partial dependencies.

CustomerNameandCustomerCitydepend only onCustomerID, not the full composite key (OrderID,ProductID). We split this into separate tables.-- Customers Table (2NF)

CREATE TABLE Customers (

CustomerID INT PRIMARY KEY,

CustomerName VARCHAR(100),

CustomerCity VARCHAR(50)

);-- Orders Table (remains for order-specific data) - To achieve 3NF, we remove transitive dependencies. In the

productstable, aCategoryNamemight depend onCategoryID, which is not the primary key. This is a transitive dependency. We would create a separateCategoriestable. The goal is for every non-key attribute to provide a fact about "the key, the whole key, and nothing but the key."

This structured approach prevents data anomalies during insertion, updates, and deletion. While higher normal forms exist, 3NF is typically the standard for most applications, offering a practical balance between data integrity and performance. Understanding this trade-off is a key part of answering advanced SQL interview questions effectively.

6. Write a recursive CTE query to traverse hierarchical data

This is a classic advanced SQL interview question because it directly challenges your ability to handle non-tabular, hierarchical data structures within a relational database. It tests your mastery of Common Table Expressions (CTEs), specifically their recursive capabilities, which are essential for navigating parent-child relationships like organizational charts, product categories, or file systems. Answering this question well demonstrates that you can solve complex problems that go beyond simple joins and aggregations.

How It Works and When to Use It

A recursive CTE is a CTE that references itself. It consists of two main parts: an anchor member and a recursive member, combined with a UNION ALL. The anchor member is a base query that runs once and returns the starting point of the recursion (e.g., the CEO of a company). The recursive member then repeatedly executes, joining back to the CTE itself to find the next level of the hierarchy (e.g., direct reports) until it returns no more rows.

You should use a recursive CTE whenever you need to:

- Traverse a tree or graph structure of unknown or variable depth.

- "Explode" a bill of materials to find all component parts.

- Generate a full organizational hierarchy from an employee-manager relationship.

- Navigate a category tree to find all subcategories under a parent category.

Example: Finding an Employee's Management Chain

Consider an employees table with employee_id, employee_name, and manager_id. To find the entire management chain for a specific employee, you can use a recursive CTE.

WITH RECURSIVE EmployeeHierarchy AS (

-- Anchor Member: Start with the employee

SELECT

employee_id,

employee_name,

manager_id,

0 AS hierarchy_level

FROM

employees

WHERE

employee_name = 'David Smith'

UNION ALL

-- Recursive Member: Join back to find the next manager

SELECT

e.employee_id,

e.employee_name,

e.manager_id,

eh.hierarchy_level + 1

FROM

employees e

JOIN

EmployeeHierarchy eh ON e.employee_id = eh.manager_id

)

SELECT

employee_name,

hierarchy_level

FROM

EmployeeHierarchy;

This query starts with 'David Smith' and recursively joins the employees table to find his manager, then his manager's manager, and so on, until it reaches the top of the hierarchy (where manager_id is NULL). A crucial part of this query is the termination condition, which is implicit: the recursion stops when the JOIN in the recursive member finds no matching rows.

Mastering recursive CTEs is a clear indicator of advanced SQL proficiency. It shows you can think algorithmically and apply sophisticated SQL features to model and query complex, real-world data relationships effectively.

7. Explain ACID properties and demonstrate them with SQL transaction examples

This is a fundamental database theory question often posed in advanced SQL interviews. It moves beyond just writing queries to test your core understanding of how relational databases guarantee data integrity and reliability, especially in concurrent environments. Answering this well shows the interviewer you appreciate the principles that make databases trustworthy systems for critical applications like banking or e-commerce.

How They Work and When to Use Them

ACID is an acronym that stands for Atomicity, Consistency, Isolation, and Durability. These four properties are the bedrock of reliable transaction processing. A transaction is a sequence of operations performed as a single logical unit of work; ACID properties ensure that this unit of work is processed reliably.

- Atomicity: This property ensures that a transaction is an "all or nothing" operation. If any part of the transaction fails, the entire transaction is rolled back, and the database is left in the state it was in before the transaction began. Use this for multi-step operations like a bank transfer, where both the debit and credit must succeed together.

- Consistency: This guarantees that a transaction brings the database from one valid state to another. It ensures that any data written to the database must be valid according to all defined rules, including constraints, cascades, and triggers. Inventory updates are a perfect example; a transaction must not result in a negative stock count if that violates a business rule.

- Isolation: This property ensures that concurrently executing transactions do not interfere with each other. The intermediate state of a transaction is hidden from other transactions until it is complete. This is crucial for booking systems to prevent two users from booking the same seat simultaneously.

- Durability: This guarantees that once a transaction has been successfully committed, it will remain so, even in the event of a power loss, crash, or error. The changes are permanently recorded in the database.

Example: A Banking Transfer

Consider a simple bank transfer from Account A to Account B. This involves two separate UPDATE statements that must be treated as a single atomic unit.

BEGIN TRANSACTION;

-- Attempt to debit $100 from Account A

UPDATE accounts

SET balance = balance - 100

WHERE account_id = 'A';

-- Attempt to credit $100 to Account B

UPDATE accounts

SET balance = balance + 100

WHERE account_id = 'B';

-- If both operations succeed, commit the transaction

COMMIT;

-- Note: In a real application, you would add error handling to ROLLBACK

-- the transaction if either UPDATE fails.

In this example, Atomicity ensures both updates happen or neither does. Consistency is maintained by checks (not shown here) that prevent Account A’s balance from going negative. Isolation prevents another transaction from reading the balances mid-transfer, and Durability ensures the new balances are permanent once the COMMIT is executed. Understanding ACID is non-negotiable for anyone building reliable, data-driven applications.

Advanced SQL Interview Questions Comparison Matrix

| Topic / Aspect | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |

|---|---|---|---|---|---|

| Explain difference between RANK(), DENSE_RANK(), ROW_NUMBER() | Medium - requires window function syntax | Moderate - can be memory-intensive on large data | Accurate ranking with tie-handling options | Analytical reports, ranking data by groups | Standardized, efficient ranking, flexible |

| Query to find second highest salary w/o LIMIT or TOP | Medium to High - multiple approaches available | Varies - can be performance-heavy without index | Finds specific ranked value without vendor hints | Interview tests, legacy system compatibility | Database-agnostic, tests SQL fundamentals |

| Explain EXISTS vs IN operators | Low to Medium - basic subquery understanding | Low - simple logical operations | Optimized existence/value checks | Filtering data by membership or existence | EXISTS efficient for large sets, IN readable |

| Query to identify and remove duplicates | Medium to High - careful delete logic needed | High - may lock tables, costly on large data | Clean data with duplicates removed | Data cleaning, ETL processes | Practical for maintaining data integrity |

| Explain database normalization and demonstrate 1NF-3NF | High - requires theoretical and practical knowledge | Moderate - complex joins may affect query speed | Well-structured, redundancy-free databases | Database design, data integrity enforcement | Reduces redundancy, improves consistency |

| Write recursive CTE for hierarchical data | High - recursive logic and termination needed | Moderate - heavy with deep hierarchies | Traverses parent-child hierarchies | Org charts, category trees, bill of materials | Handles unlimited depth, elegant tree traversal |

| Explain ACID properties with transaction examples | Medium - requires transaction understanding | Moderate - transaction overhead possible | Reliable, consistent concurrent data operations | Financial systems, complex multi-step transactions | Ensures data integrity, safe concurrency |

Your Next Steps to SQL Interview Success

Navigating the landscape of advanced SQL interview questions is less about rote memorization and more about demonstrating a deep, conceptual understanding of data manipulation, performance optimization, and database theory. The questions we've explored, from wielding window functions like RANK() and DENSE_RANK() to architecting recursive CTEs for hierarchical data, are designed to test your problem-solving process as much as your technical syntax.

The true goal of these interviews is to see how you think. Can you articulate the performance trade-offs between EXISTS and IN? Do you understand not just how to remove duplicates, but the potential data integrity risks involved? Can you explain the real-world importance of ACID properties beyond their textbook definitions? Answering these questions effectively proves you can be trusted with complex, mission-critical data systems.

Key Takeaways for Your Preparation

To truly internalize these concepts, move beyond simply re-reading the solutions. The path to mastery is through active, hands-on practice.

- Deconstruct the Logic: For each question, focus on the "why" behind the solution. Why is a subquery or a CTE the better choice for finding the Nth highest salary? Why is

ROW_NUMBER()the ideal tool for deduplication tasks? Understanding the fundamental logic makes your knowledge adaptable to any new problem you encounter. - Practice with Variations: Don't just solve for the "second highest salary." Challenge yourself to find the third, fourth, or Nth highest. Modify the recursive CTE example to handle a different organizational structure. This proactive practice builds mental flexibility and reinforces your core skills.

- Verbalize Your Solutions: A brilliant query is only half the battle; you must be able to explain it clearly. Practice walking through your code out loud, as if you were explaining it to an interviewer. This helps you identify gaps in your own understanding and builds confidence in your communication.

Charting Your Course to Success

Mastering these topics will undoubtedly set you apart, but remember that securing the interview is the crucial first step. Your resume must effectively communicate your technical prowess and value. To maximize your chances of getting a technical interview, understanding how to write a professional resume to get more interviews is essential for showcasing your skills before you even write a single line of code.

By combining deep, practical SQL knowledge with a powerful professional narrative, you create an unstoppable combination. Continue to challenge yourself with new problems, stay curious about database internals, and approach every interview as an opportunity to demonstrate your expertise. With diligent preparation, you won't just answer the questions; you'll lead a technical discussion that leaves a lasting, positive impression.

Ready to take your interview preparation to the next level? ParakeetAI is an AI-powered assistant that provides real-time, tailored guidance during your practice sessions on platforms like LeetCode and HackerRank. It helps you articulate complex solutions with confidence, ensuring you’re fully prepared to impress on interview day. Visit ParakeetAI to see how it can become your secret weapon for landing your dream tech role.